Podcast Summary

Section 230: Protecting Websites from Liability for User Content: Section 230 of the Communications Decency Act shields websites from liability for their users' illegal content and moderation decisions, ensuring the Internet's functionality and fostering diverse online communities

Section 230 of the Communications Decency Act is a crucial law that allows interactive websites to avoid liability for their users' content and for their content moderation decisions. This law, which was passed in 1996 and has survived numerous legal challenges, places the liability for illegal content on the person who posted it rather than the platform. Additionally, it protects websites from lawsuits if they choose to moderate or remove content. This was a response to a series of lawsuits in the 1990s where websites were held liable for defamatory statements made on their platforms because they had attempted to moderate the content. Section 230 is essential for the functioning of the modern Internet and allows for the existence of various online communities and platforms.

Section 230: Protecting Interactive Computer Services Hosting Third-Party Content: Section 230 of the Communications Decency Act protects interactive computer services, including social media platforms, from liability for third-party content, allowing them to moderate and present content as they see fit, promoting free speech online.

Section 230 of the Communications Decency Act has been a crucial factor in the development and thriving of the modern internet, allowing websites to moderate content in good faith and present it in a way that aligns with their goals. Despite ongoing debates about "publishers" versus "platforms," the law makes no such distinction; it protects any interactive computer service that hosts third-party content, including user-generated content. The recent addition of a fact-check feature on Twitter, which links to third-party news sites, is an example of how this law functions in practice. Ultimately, Section 230 is a significant gift to free speech for everyone, enabling a wide range of online content and activity.

Twitter adding context and limiting interactions on political content: Twitter can add fact checks and limit interactions on political content under Section 230, balancing free speech and terms of service.

Social media platforms like Twitter are navigating a complex line between enforcing their terms of service and upholding free speech principles, particularly when it comes to content from politicians. During the George Floyd protests, Twitter added a warning notice and limited interactions on a tweet from the president that violated its rules on violent speech. Section 230 allows this kind of action. Rather than removing the tweet, Twitter added context and limited interactions. The First Amendment protects individuals from government infringement on speech; it does not bind private companies like Twitter. The distinction between platforms and publishers continues to be debated, but the ability of companies to add context and limit interactions on potentially harmful content is a crucial aspect of the ongoing conversation.

Limited applicability of public utility and public square analogies to social media platforms: Public utility and public square analogies fit social media platforms poorly: the platforms are not interchangeable commodity services, and they cannot be forced to act as public forums without infringing on free speech rights.

While there are some interesting parallels between the current debate around content moderation on social media platforms and the historical context of public utilities and the concept of a public square, the applicability of these analogies is limited. The Internet and edge providers like Google, YouTube, Twitter, and Facebook do not meet the requirements of traditional public utilities because they are not commodified services that are one-to-one replaceable. Instead, they offer unique features and experiences that cannot be easily switched out. Furthermore, the argument that social media platforms are public forums and cannot engage in content moderation has consistently been rejected in courts. While these debates are important to understand, it's crucial to recognize their limitations and consider the unique characteristics of digital platforms.

Courts have ruled that social media platforms aren't public squares or state actors: Courts have consistently found that social media companies don't have the same responsibilities as traditional public spaces or government entities regarding free speech.

The concepts of the public square and state action apply only to a very limited set of circumstances in which private companies take over functions traditionally performed by the government. Courts applying cases such as Pruneyard, Packingham, and Manhattan Community Access Corp. v. Halleck (the Manhattan Neighborhood Network case) have held that social media platforms like Twitter, Facebook, and YouTube do not qualify as public squares or state actors. The recent executive order on social media regulation may generate hype, but its constitutionality remains uncertain, as previous attempts to regulate social media in this way have been deemed unconstitutional by various agencies.

FCC has no authority to regulate websites: The recent executive order asking the FCC to regulate websites goes against the law and decades of case law, and is unlikely to change the legal status of websites under FCC jurisdiction.

The recent executive order asking the Federal Communications Commission (FCC) to reinterpret Section 230 of the Communications Decency Act (CDA) is concerning because it goes against both the statute and decades of case law, which have established that the FCC has no authority to regulate websites. The administration can request a rulemaking, but the FCC is an independent agency, and the president cannot order it to act. The FCC can create a nuisance through rulemaking and enforcement, but it cannot regulate websites because they are not within its jurisdiction. The executive order also misreads CDA 230 by applying the good-faith limitation on moderation to the separate provision that shields sites from liability for third-party content. This misinterpretation could lead to a lengthy and distracting rulemaking process, but ultimately it is unlikely to bring websites under FCC jurisdiction.

A Confusing Interpretation of Section 230 of the CDA: The recent executive order proposes a confusing interpretation of Section 230 of the CDA, potentially leading to misuse and legal challenges, including the linking of encryption debates through the EARN IT Act.

The recent executive order regarding Section 230 of the Communications Decency Act (CDA) proposes a confusing and potentially bad-faith interpretation of the law. The order asks the FCC to examine whether the provision that protects websites from liability for their users' libelous or otherwise harmful content should be conditioned on the good-faith requirement that governs content moderation, conflating two separate parts of the statute. Additionally, the order instructs the attorney general to draft a state law to reinterpret CDA 230 in a way that diminishes its power, even though the law already does not limit federal criminal liability for sites hosting illegal content. This could lead to confusion and potential misuse of the law. The encryption debate, which involves conflicting views on end-to-end encryption and 230 protections, is also being linked to this issue through the EARN IT Act, which could create significant legal and practical challenges.

Impact of Executive Order on Social Media Companies' Advertising: The executive order's impact on social media companies' advertising is limited due to the FCC's jurisdiction and the First Amendment, but potential restrictions on government advertising spending and impact on data collection for agencies could be significant.

Social media companies like Twitter, Facebook, and YouTube may face increased scrutiny and potential restrictions from the government, but the executive order's impact is limited due to the FCC's jurisdiction and the First Amendment. The order's threat to limit government advertising spending on these platforms is minimal, as federal procurement records show that it's a small portion of their revenues. However, the potential impact on agencies, such as the Census Bureau, that use social media advertising to reach a wider audience could be significant, potentially limiting their ability to collect data required by the Constitution. Additionally, Congress could introduce legislation to address this issue, but it would likely face First Amendment challenges and may not be successful. Overall, the executive order represents a complex issue with significant implications for free speech, government spending, and data collection.

The complexity of content moderation: Mike Masnick's impossibility theorem holds that content moderation is subjective and mistakes will be made, but it's crucial to continue the conversation and find a balance between free speech and safety.

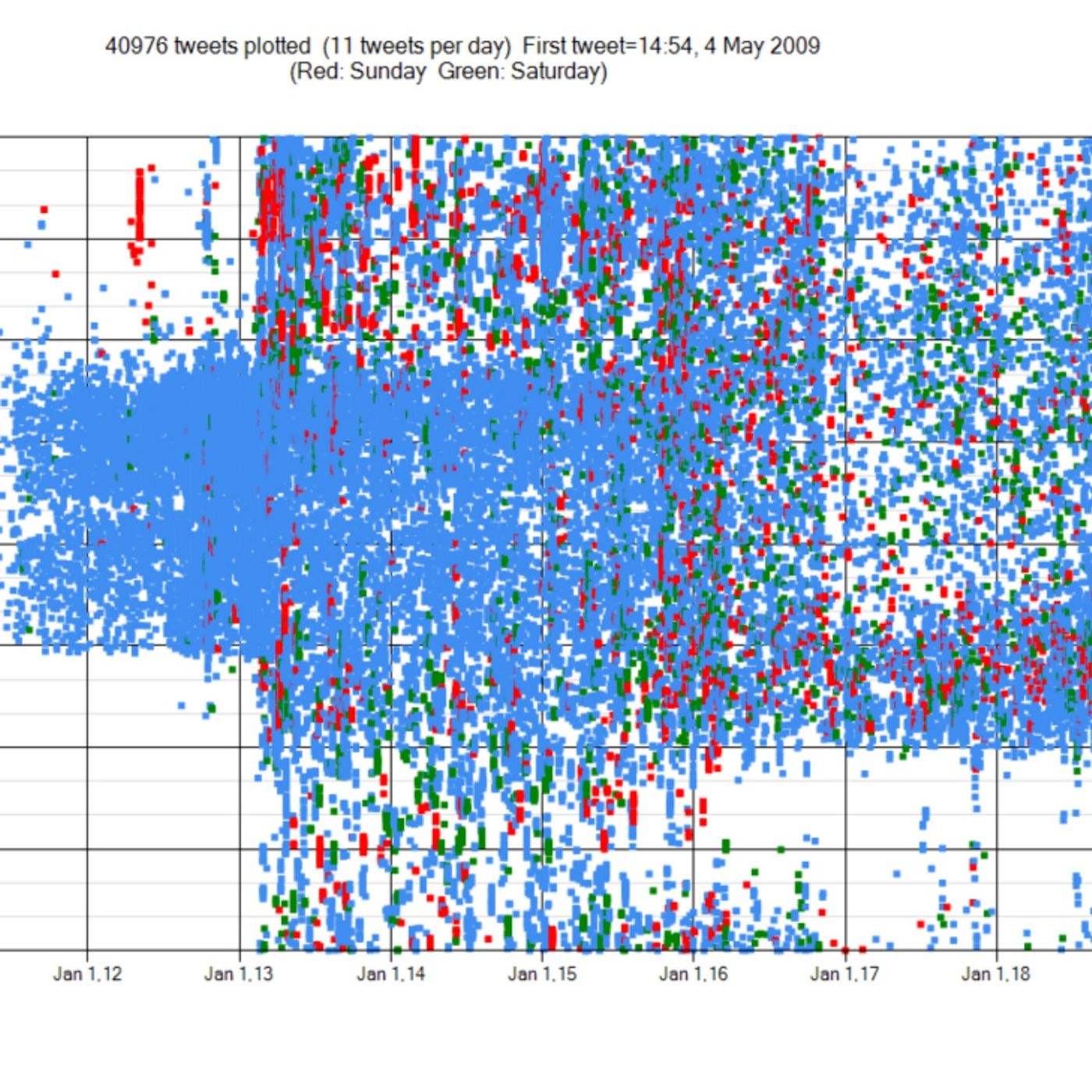

Content moderation on the internet, which Section 230 of the Communications Decency Act protects, is a complex and subjective issue with no easy solutions. Mike Masnick's "impossibility theorem" highlights the fact that any decision made about content moderation will upset someone, and the vast amount of content being generated daily ensures that mistakes will be made. Despite these challenges, it's important to continue the conversation around content moderation and its role in protecting users and maintaining a healthy online community. Solutions may include a combination of human moderation, AI technology, and clearer guidelines for content policies. Ultimately, finding a balance between free speech and safety will require ongoing dialogue and collaboration between tech companies, policymakers, and users.

Section 230 enables online experimentation and innovation: Section 230's protection for websites allows for user-generated content and community moderation, fostering innovation and competition, but potential regulations could unintentionally limit smaller online services.

Section 230 of the Communications Decency Act plays a crucial role in enabling diverse experimentation and innovation across various online platforms, including social media sites, blogs, and search engines. This protection extends to all websites, regardless of their size or popularity, and allows for user-generated content and community moderation. However, proposed regulations, such as the recent executive order, could inadvertently limit the growth of new and smaller online services by making compliance too costly and complex. It's essential to remember that regulations such as GDPR can have unintended consequences, often benefiting larger companies at the expense of smaller players. Therefore, striking a balance between necessary regulations and maintaining the openness and accessibility of the internet is crucial for fostering innovation and competition.

Push to change internet moderation rules: The ongoing debate and experimentation with various moderation approaches in crypto and decentralized systems is crucial to finding the best solutions for different communities and purposes, recognizing that there isn't a one-size-fits-all approach.

There's a growing push to change the rules of internet moderation, with the recent executive order being just one example. While the order itself may not bring about significant changes, the broader trend of legislation targeting content moderation is a cause for concern. This could potentially limit the types of moderation approaches available and impact freedom of speech online. It's crucial to allow for experimentation with various governance models in the crypto and decentralized systems space, as different approaches will work best for different communities and purposes. The ongoing debate and experimentation are essential to finding the best solutions for various services and recognizing that there isn't a one-size-fits-all approach.

Effective Communication: Active Listening, Empathy, and Clarity: Effective communication involves active listening, empathy, clarity, and nonverbal cues to build stronger relationships, improve collaboration, and enhance productivity.

Effective communication is key to building strong relationships, both in personal and professional settings. During our discussion, we explored various aspects of communication, including active listening, empathy, and clarity. Active listening involves fully concentrating on the speaker, understanding their perspective, and responding appropriately. Empathy, on the other hand, involves putting oneself in someone else's shoes and understanding their emotions. Clarity is essential to ensure that messages are conveyed effectively and that there is no room for misinterpretation. Furthermore, we discussed the importance of body language and tone in communication, as they can often convey more than words alone. In conclusion, effective communication requires a combination of active listening, empathy, clarity, and nonverbal cues. By mastering these skills, we can build stronger relationships, improve collaboration, and enhance overall productivity.